Automated Kubernetes Rollbacks in Spinnaker

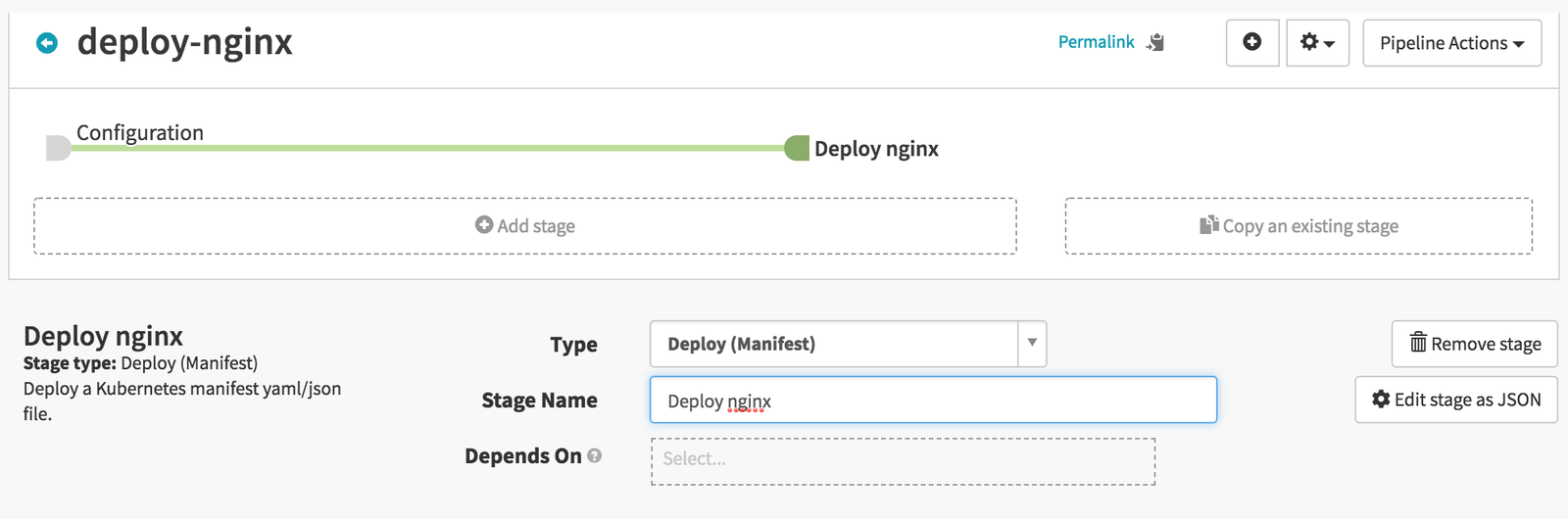

Creating a main deployment pipeline

Let’s start by creating a new pipeline that will deploy a simple nginx container:

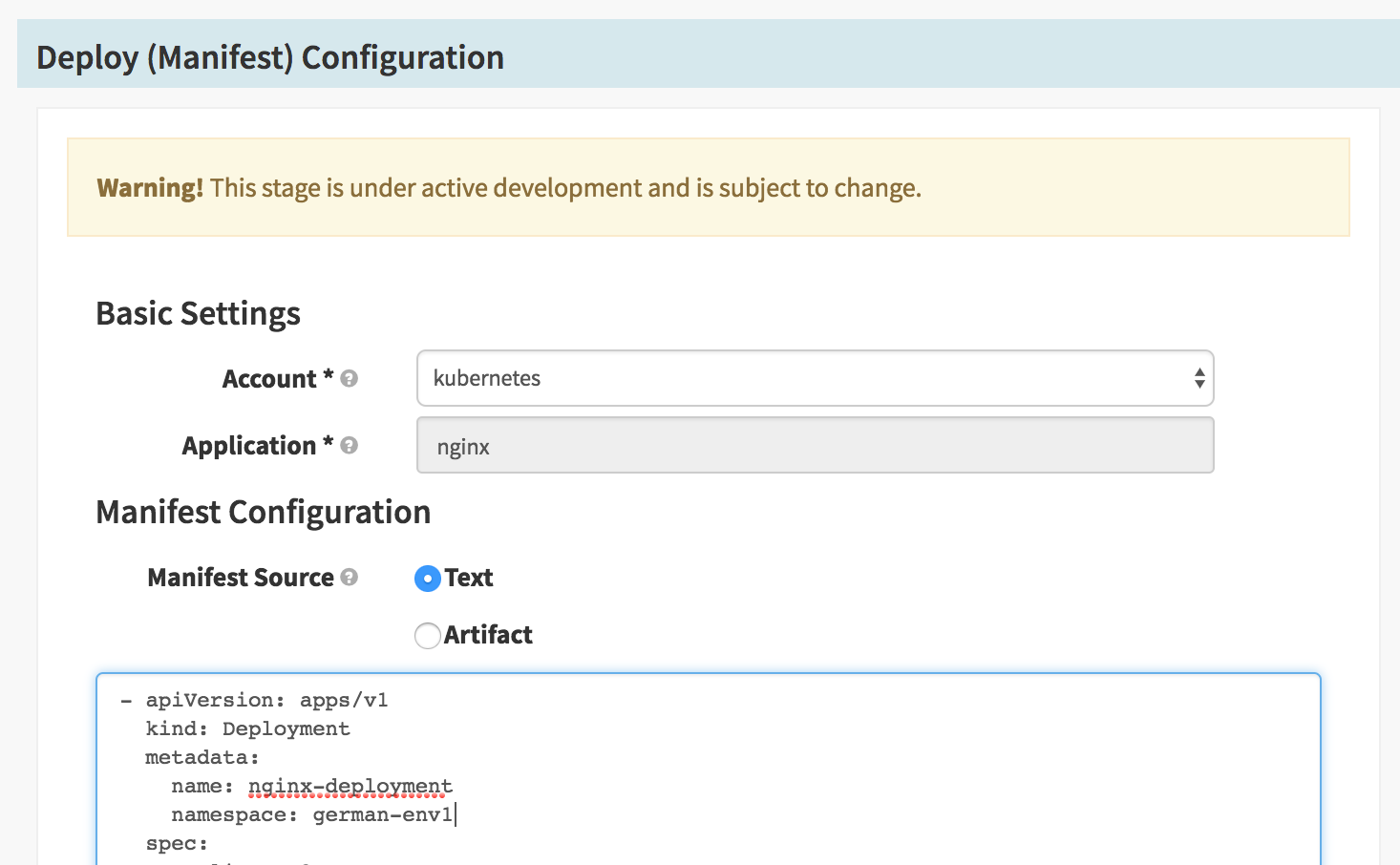

For simplicity, we’ll supply the manifest as text directly in the Deploy (Manifest) stage, but it can also be pulled from a version control system such as GitHub:

This is the sample deployment manifest, which will create two nginx pods in Kubernetes under the “german-env1” namespace (the namespace must exist already):

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      namespace: german-env1
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 2
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.0
            ports:
            - containerPort: 80
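For readers who prefer to generate the manifest rather than type it, here is a minimal Python sketch that builds the same Deployment as a dictionary. It is not part of the original tutorial: the helper names `build_nginx_deployment` and `selector_matches_template` are hypothetical. The output is printed as JSON, which Kubernetes accepts as a manifest format alongside YAML.

```python
import json


def build_nginx_deployment(image: str, namespace: str = "german-env1", replicas: int = 2) -> dict:
    """Return the tutorial's Deployment manifest as a plain dictionary."""
    labels = {"app": "nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nginx-deployment", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": dict(labels)},
            "replicas": replicas,
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "nginx",
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


def selector_matches_template(manifest: dict) -> bool:
    """Check that every selector label is also set on the pod template."""
    selector = manifest["spec"]["selector"]["matchLabels"]
    pod_labels = manifest["spec"]["template"]["metadata"]["labels"]
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    manifest = build_nginx_deployment("nginx:1.14.0")
    assert selector_matches_template(manifest)
    print(json.dumps(manifest, indent=2))
```

Keeping the image as a parameter makes it easy to produce a second version of the manifest later by changing only the image tag.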

This is all that is required in the deploy pipeline. We can now save the pipeline and run it manually; it should complete successfully.

Generating historic deployment versions for testing rollbacks

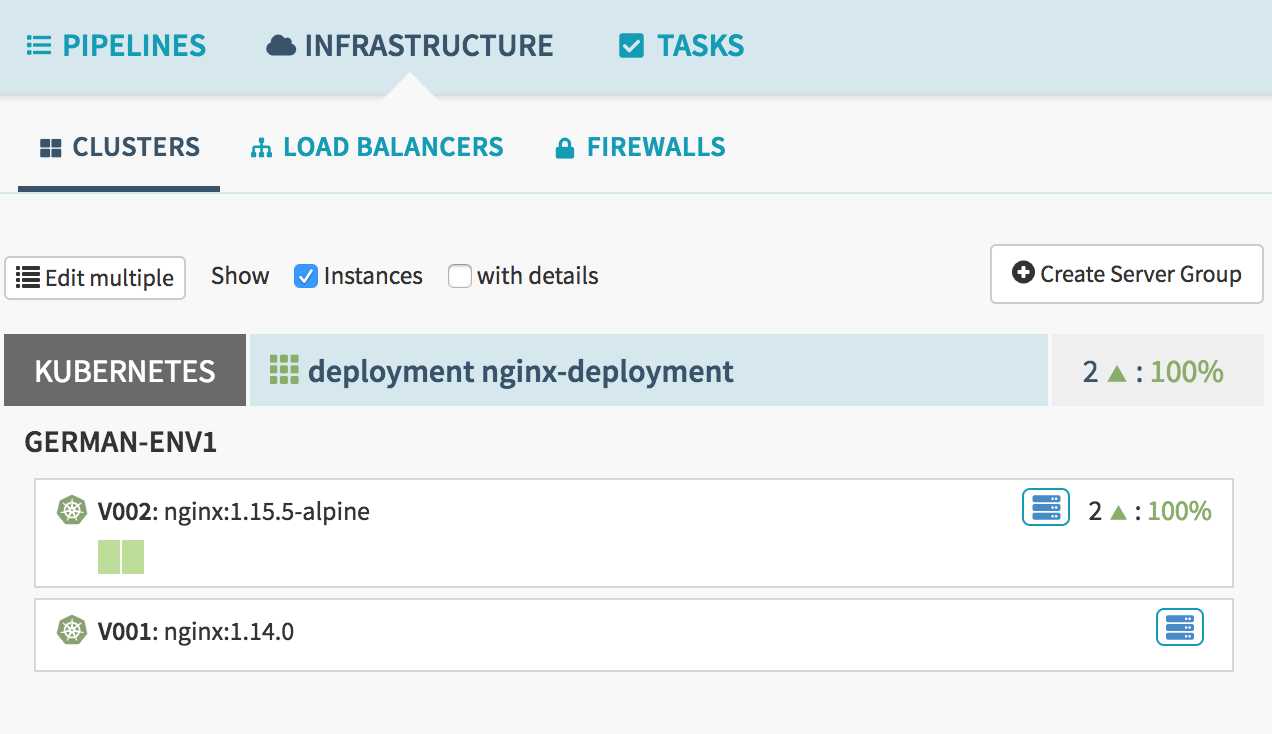

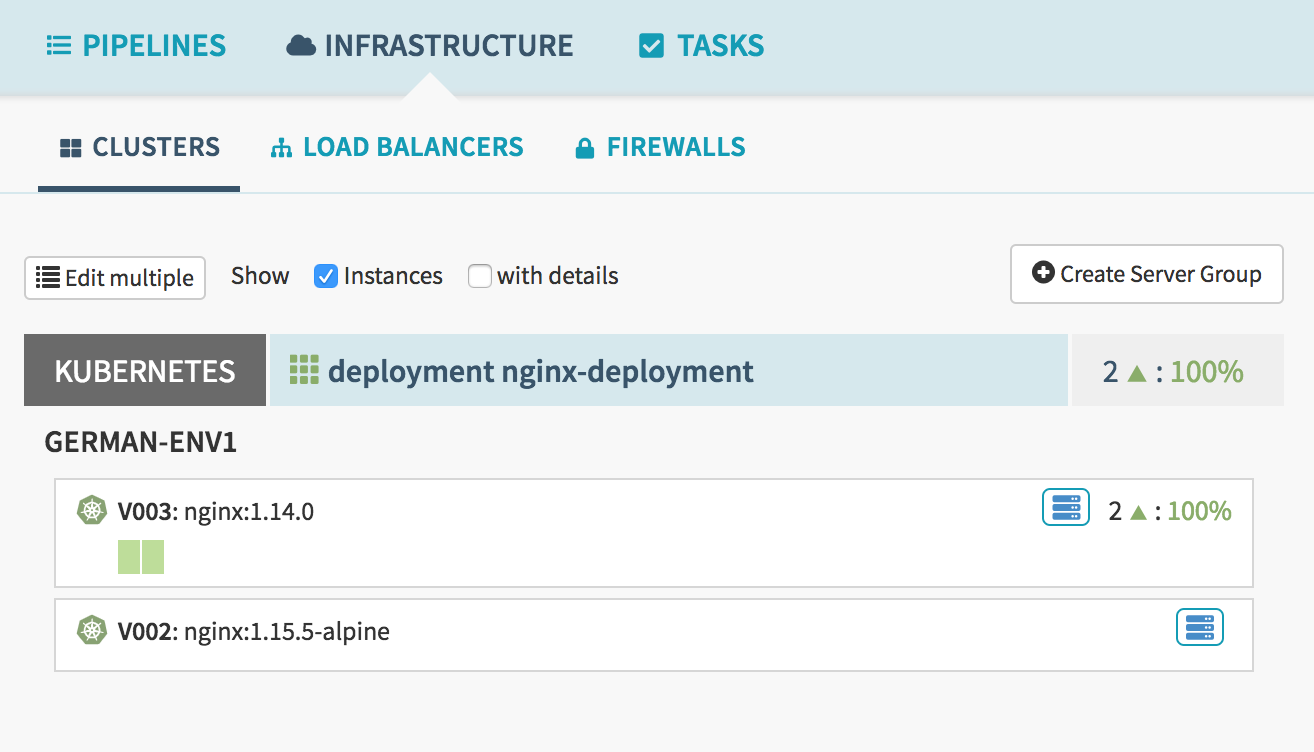

We’ll test rollbacks by changing the deployment manifest and running the pipeline again, so that we end up with two historic versions of the deployment. First, edit the manifest to change the nginx image from nginx:1.14.0 to nginx:1.15.5-alpine, then run the pipeline. The infrastructure view now shows two historic versions of the deployment:

The first version deployed (V001) corresponds to nginx 1.14.0, while the second version (V002) corresponds to nginx 1.15.5-alpine.

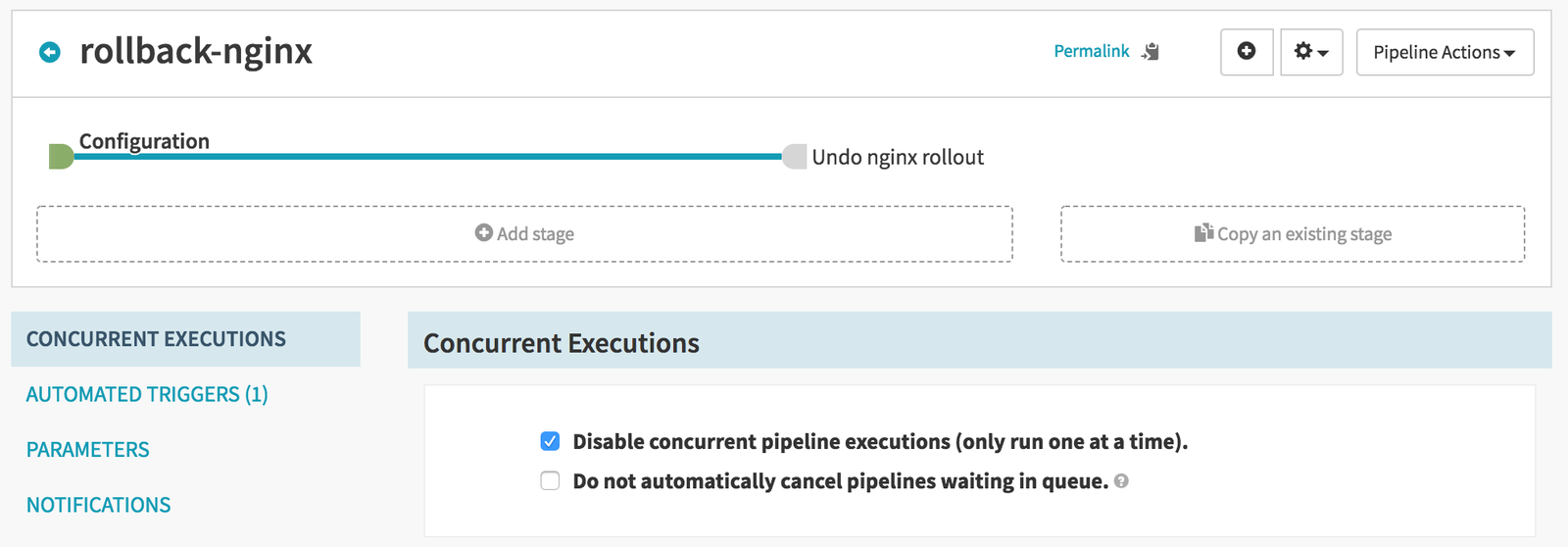

Creating a rollback pipeline

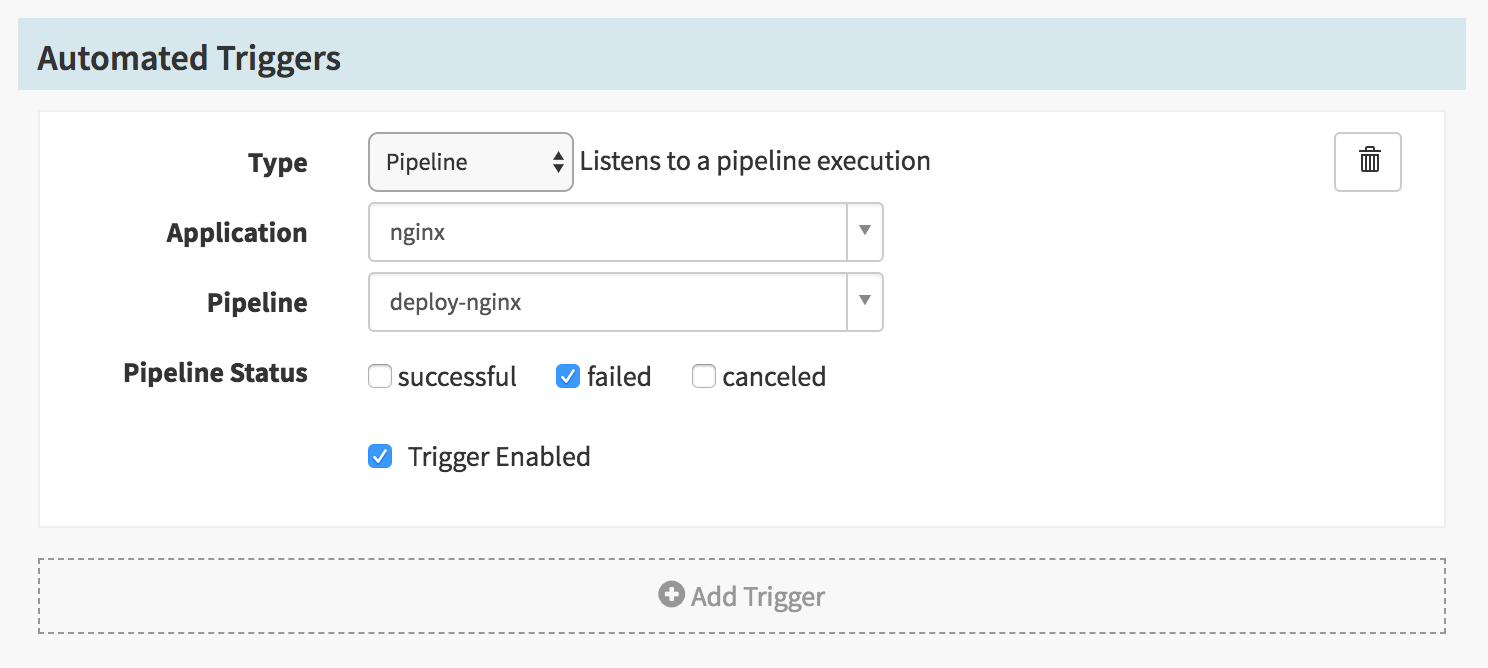

Now we’ll create a rollback pipeline that triggers automatically when the deploy-nginx pipeline fails:

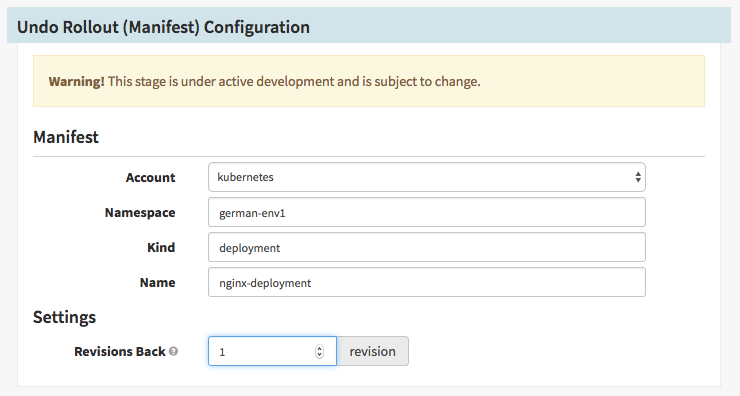

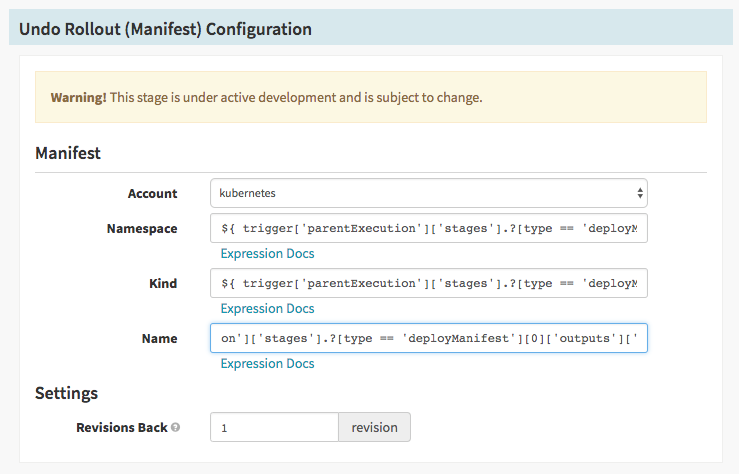

This pipeline just contains a single stage of type Undo Rollout (Manifest) with the following configuration:

This configuration is important: the fields Namespace, Kind and Name are the coordinates of the Kubernetes deployment and must match what is defined in the deployment manifest of the deploy pipeline. Revisions Back indicates how many deployment versions to roll back. Our deployment history currently contains V001 and V002, and the version number increases automatically each time a deployment occurs. The Spinnaker documentation describes in detail how the Undo Rollout (Manifest) stage works.

Testing rollback logic

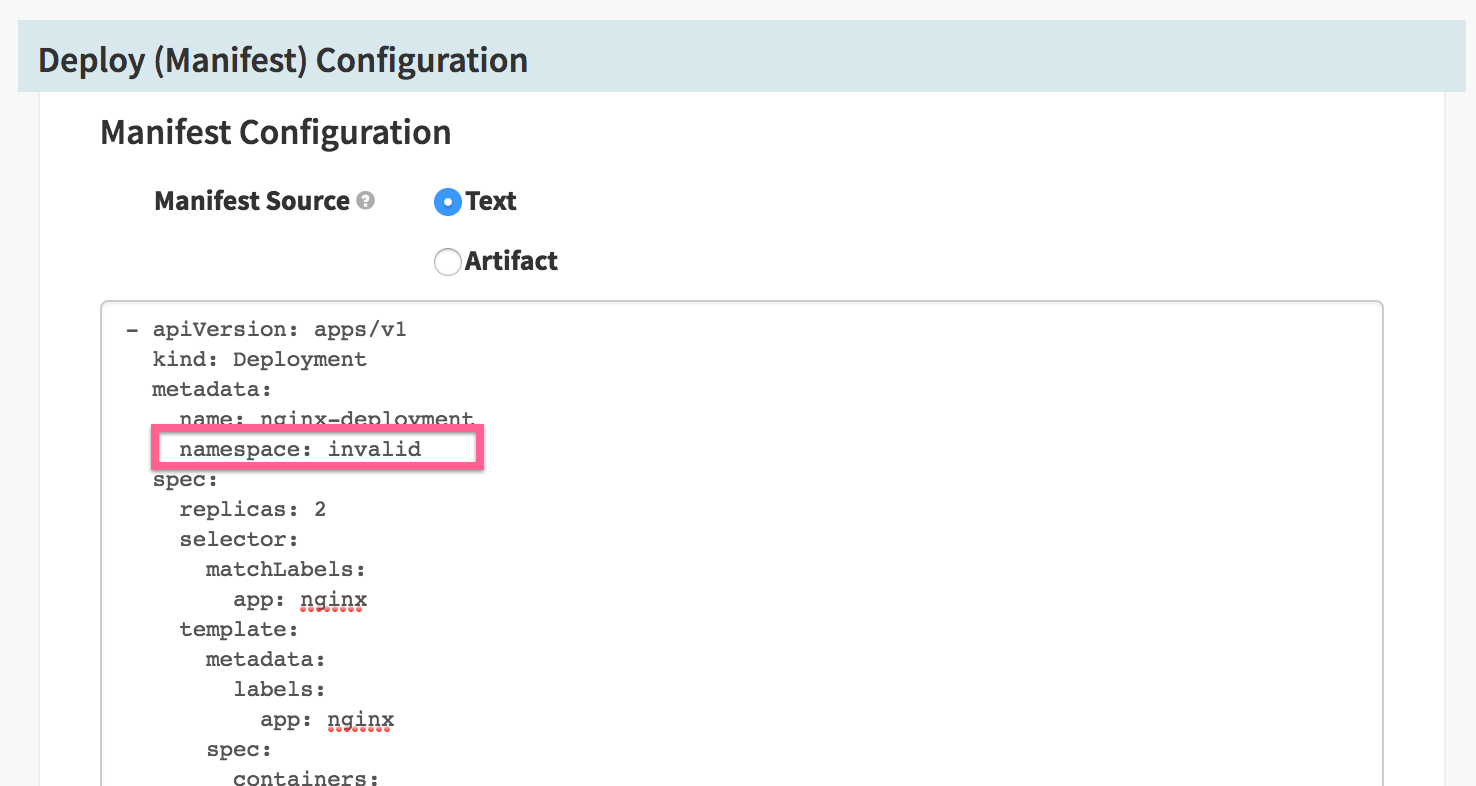

Now we’ll test the rollback by changing the manifest so that the deployment fails. In deploy-nginx we’ll change the namespace to an invalid value:

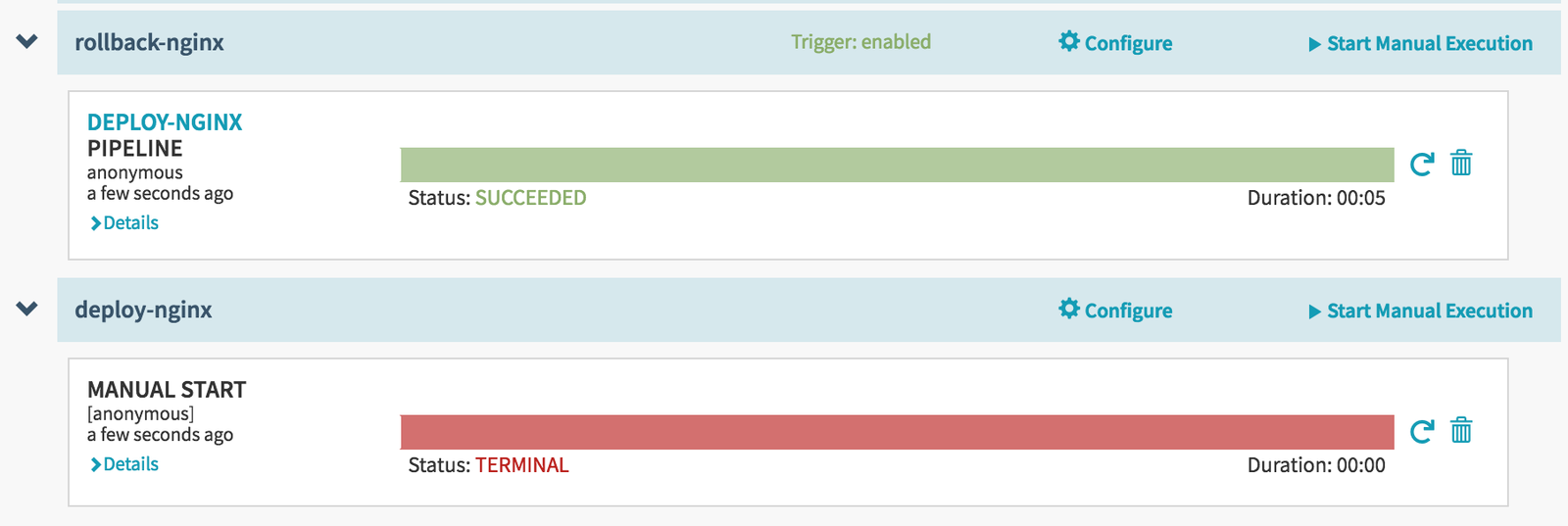

We’ll run the pipeline again and watch the rollback pipeline kick in automatically to roll back the deployment by one version:

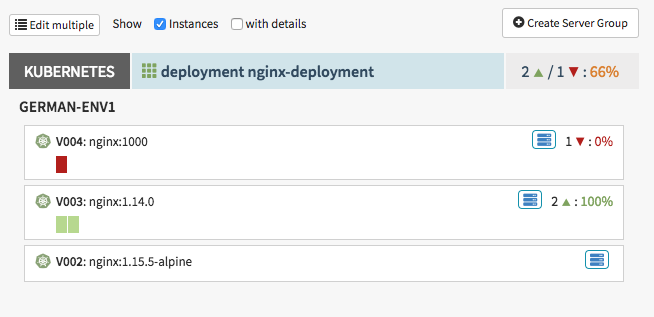

If we go to the infrastructure view we can see that the active deployment was rolled back to the previous version:

In this case, V003 was generated by the rollback operation with the contents of a previous deployment. This is the summary of events:

| Versions before failed deployment | Versions after failed deployment |
|---|---|
| V001 (nginx:1.14.0) | V002 (nginx:1.15.5-alpine) |
| V002 (nginx:1.15.5-alpine) → ACTIVE | V003 (nginx:1.14.0) → ACTIVE |
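The version bookkeeping in this table can be mimicked with a small Python sketch. It is a toy model of the behavior shown here, not Spinnaker's or Kubernetes' implementation; the function name `undo_rollout` and the dictionary-based history are hypothetical. The model assumes, as the table suggests, that the rolled-back contents reappear under a new version number rather than keeping the old one.

```python
def undo_rollout(versions: dict[int, str], revisions_back: int = 1) -> dict[int, str]:
    """Toy model of a rollback over a {version number: image} history.

    The highest version number is treated as the active one.
    """
    ordered = sorted(versions)
    if revisions_back < 1 or revisions_back >= len(ordered):
        raise ValueError("not enough historic versions to roll back that far")
    target = ordered[-1 - revisions_back]
    result = dict(versions)
    # The earlier contents become the newest version: the old number
    # disappears and a new, higher number takes its place.
    image = result.pop(target)
    result[ordered[-1] + 1] = image
    return result


if __name__ == "__main__":
    before = {1: "nginx:1.14.0", 2: "nginx:1.15.5-alpine"}
    after = undo_rollout(before, revisions_back=1)
    active = max(after)
    for number in sorted(after):
        marker = " -> ACTIVE" if number == active else ""
        print(f"V{number:03d} ({after[number]}){marker}")
```

With Revisions Back set to 1, the sketch turns the "before" column into the "after" column: V002 stays in the history and V003 becomes active with the nginx:1.14.0 image.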

Here we can see one gotcha of the Undo Rollout (Manifest) stage: it rolls back a version regardless of whether the failed pipeline actually deployed the application. Make sure this stage only executes when the artifact to roll back was deployed successfully and some later logic marked the pipeline as failed; otherwise it may have the unintended consequence of rolling back a healthy version. One use case is a pipeline that first deploys configuration changes to Kubernetes and then deploys an updated version of a service: a rollback pipeline can roll back the config changes if the main service deployment fails.

What happens if we don’t have a rollback pipeline? In the previous case, where the namespace is invalid, nothing is deployed and we end up with a clean state, but if something goes wrong after a deployment we’ll end up with a failed server group. To see this in action, let’s change the nginx image to a nonexistent version (1000), disable the rollback pipeline, and see what the infrastructure view looks like after a failed deployment:

This is clearly not what we want. Although the previous version (V003 - nginx:1.14.0) is still active and running, we have a failed server group (replica set) that cannot start, so the rollback pipeline is still necessary to clean this up. In the next section we’ll look at a more advanced way of detecting when to apply a rollback.

Advanced configuration: Protecting against unwanted rollbacks

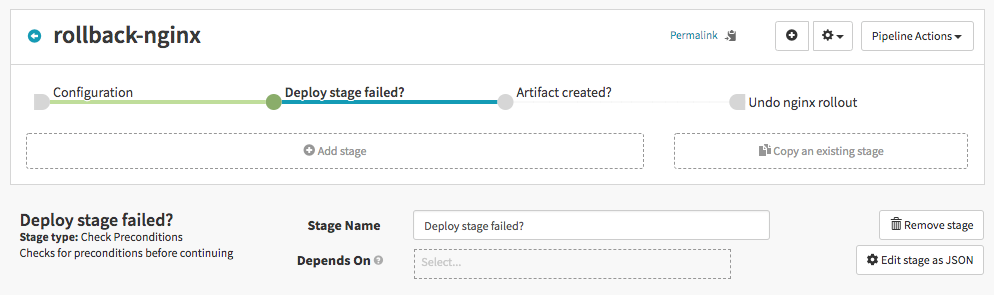

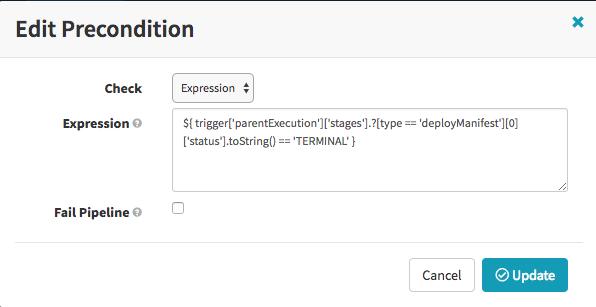

A deployment pipeline can fail for many different reasons, but a rollback should be executed only when something broken was actually deployed. One way to detect this case is with conditional checks written as Spring Expressions. In the rollback pipeline we’ll add two more stages of type “Check Preconditions” before the Undo Rollout stage:

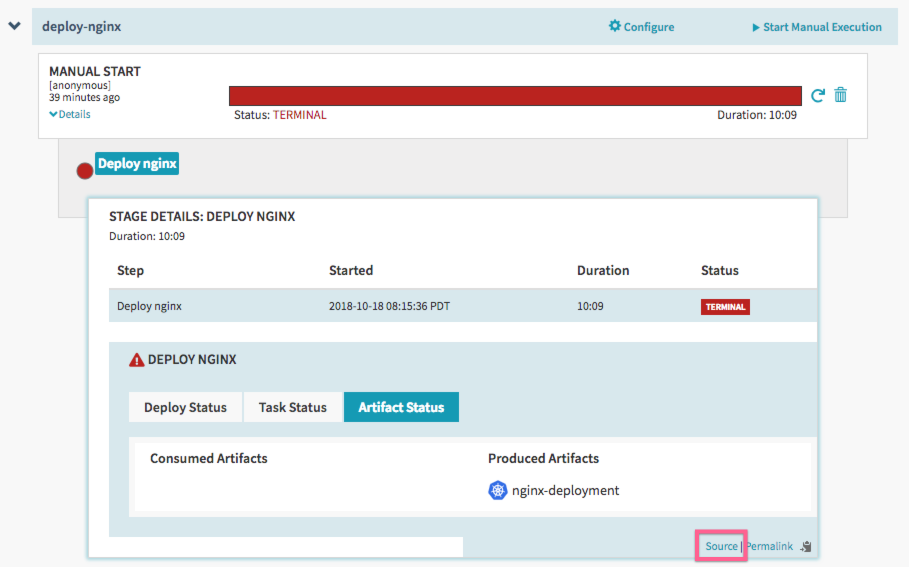

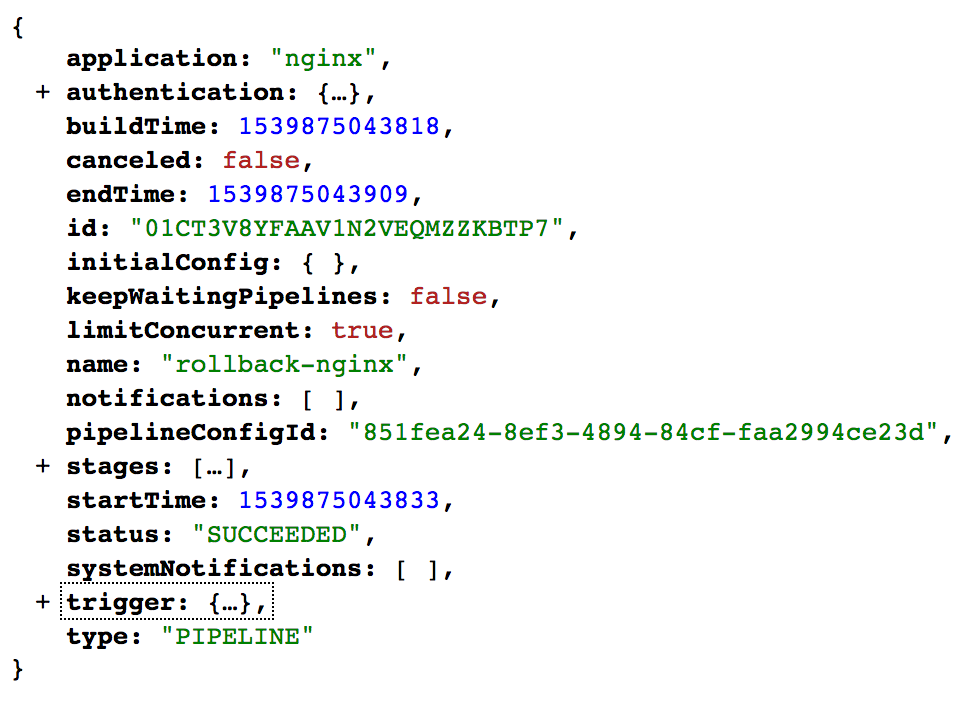

This allows us to write expressions that continue the pipeline only if a broken deployment occurred. Every pipeline execution has a “context” object holding information about all aspects of the execution. We can see this information by clicking the “Source” link inside the details of a previous execution:

This opens a new tab showing JSON similar to this:

We’re looking for two pieces of information inside this JSON:

- A stage of type deployManifest in the trigger pipeline execution that failed.
- This stage should have created an artifact.

The corresponding Spring Expression for the “Deploy stage failed?” stage is the following:

${ trigger['parentExecution']['stages'].?[type == 'deployManifest'][0]['status'].toString() == 'TERMINAL' }

And for the “Artifact created?” stage:

${ trigger['parentExecution']['stages'].?[type == 'deployManifest'][0]['outputs']['outputs.createdArtifacts'] != null }

Together, these expressions look in the triggering pipeline execution for the first stage of type deployManifest and check that its status is TERMINAL (a failed stage) and that it created some artifacts. The Spinnaker reference documentation for pipeline expressions covers the full syntax.
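To make the logic of the two preconditions easier to follow, here is a rough Python equivalent. It is only a sketch: the function names are hypothetical, and the sample trigger dictionaries are made-up data shaped after the keys the expressions access (`parentExecution`, `stages`, `type`, `status`, `outputs`, `outputs.createdArtifacts`), not real execution output. Unlike the expressions, the sketch returns False instead of raising an error when no deployManifest stage exists.

```python
from typing import Optional


def first_deploy_stage(trigger: dict) -> Optional[dict]:
    """Mirror of `stages.?[type == 'deployManifest'][0]` in the expressions."""
    stages = trigger.get("parentExecution", {}).get("stages", [])
    matches = [stage for stage in stages if stage.get("type") == "deployManifest"]
    return matches[0] if matches else None


def deploy_stage_failed(trigger: dict) -> bool:
    """Equivalent of the "Deploy stage failed?" precondition."""
    stage = first_deploy_stage(trigger)
    return stage is not None and str(stage.get("status")) == "TERMINAL"


def artifact_created(trigger: dict) -> bool:
    """Equivalent of the "Artifact created?" precondition."""
    stage = first_deploy_stage(trigger)
    if stage is None:
        return False
    return stage.get("outputs", {}).get("outputs.createdArtifacts") is not None


def should_roll_back(trigger: dict) -> bool:
    """Both preconditions must hold before the Undo Rollout stage runs."""
    return deploy_stage_failed(trigger) and artifact_created(trigger)


if __name__ == "__main__":
    # Hypothetical sample data: failed before anything was created.
    invalid_namespace = {
        "parentExecution": {
            "stages": [{"type": "deployManifest", "status": "TERMINAL", "outputs": {}}]
        }
    }
    # Hypothetical sample data: something was created, then the stage failed.
    broken_image = {
        "parentExecution": {
            "stages": [
                {
                    "type": "deployManifest",
                    "status": "TERMINAL",
                    "outputs": {"outputs.createdArtifacts": [{"name": "nginx-deployment"}]},
                }
            ]
        }
    }
    print("invalid namespace:", should_roll_back(invalid_namespace))
    print("broken image:", should_roll_back(broken_image))
```

The list comprehension plays the role of the `.?[...]` selection filter, and taking the first match corresponds to the `[0]` index in the expressions.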

We also uncheck “Fail Pipeline” on these stages, so that when there is nothing to roll back the pipeline simply stops instead of failing. Using Spring Expressions, we can also specify the coordinates of the artifact to roll back in the Undo Rollout stage, taking them from the outputs.createdArtifacts object above:

Namespace: ${ trigger['parentExecution']['stages'].?[type == 'deployManifest'][0]['outputs']['outputs.createdArtifacts'][0]['location'] }

Name: ${ trigger['parentExecution']['stages'].?[type == 'deployManifest'][0]['outputs']['outputs.createdArtifacts'][0]['name'] }

Kind: ${ trigger['parentExecution']['stages'].?[type == 'deployManifest'][0]['outputs']['outputs.createdArtifacts'][0]['type'].substring('kubernetes/'.length()) }
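The same extraction can be sketched in Python to show what each expression yields. The function name `rollback_coordinates` and the sample artifact are hypothetical; the artifact only assumes the three keys the expressions read (`location`, `name`, `type`) and a `type` value that starts with `kubernetes/`.

```python
def rollback_coordinates(artifact: dict) -> dict:
    """Derive the Undo Rollout fields from one created artifact."""
    prefix = "kubernetes/"
    return {
        "namespace": artifact["location"],
        "name": artifact["name"],
        # Same idea as type.substring('kubernetes/'.length()):
        # drop the leading characters and keep the rest as the kind.
        "kind": artifact["type"][len(prefix):],
    }


if __name__ == "__main__":
    # Hypothetical artifact shaped after the keys used in the expressions.
    sample = {
        "location": "german-env1",
        "name": "nginx-deployment",
        "type": "kubernetes/deployment",
    }
    print(rollback_coordinates(sample))
```

Like the `substring` call, the slice removes a fixed number of characters without checking that the prefix is really present, so it relies on the artifact type having that form.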

This makes the Undo Rollout stage a bit more resilient in case the artifact coordinates change:

Now we can repeat the tests and see that the rollback executes only when the nginx version is changed to a nonexistent one, and not when the namespace is invalid, even though both scenarios fail the deployment pipeline.
