Install Armory Enterprise for Spinnaker Using the Armory Operator

Armory Enterprise requires a license. For more information, contact Armory.

Two types of Kubernetes Operators for installing Spinnaker

- The open source Spinnaker Operator for Kubernetes installs open source Spinnaker™. You can download the Operator from its GitHub repo.

- The Armory Operator installs Armory Enterprise for Spinnaker. This Operator processes configuration entries for proprietary enterprise features.

Most of the configuration is the same between the open source and proprietary types of Operators.

Advantages of using the Armory Operator

- Manage Armory Enterprise with kubectl like other applications.

- Expose Armory Enterprise via LoadBalancer or Ingress (optional).

- Keep secrets separate from your configuration. Store your config in git and have an easy GitOps workflow.

- Validate your configuration before applying it (with webhook validation).

- Store Spinnaker secrets in Kubernetes secrets.

- Gain total control over Armory Enterprise Platform manifests with kustomize style patching.

- Define Kubernetes accounts in SpinnakerAccount objects and store kubeconfig inline, in Kubernetes secrets, in s3, or GCS (Experimental).

- Deploy Armory in an Istio controlled cluster (Experimental).

Requirements for using the Armory Operator

Before you start, ensure you meet the following requirements:

- Your Kubernetes cluster runs version 1.13 or later.

- You have admission controllers enabled in Kubernetes (--enable-admission-plugins).

- You have ValidatingAdmissionWebhook enabled in the kube-apiserver. Alternatively, you can pass the --disable-admission-controller parameter to the deployment.yaml file that deploys the operator.

- You have administrator rights to install the Custom Resource Definition (CRD) for Operator.

Install the Armory Operator

The Armory Operator has two distinct modes:

- Basic: Installs Armory into a single namespace. This mode does not perform pre-flight checks before applying a manifest.

- Cluster: Installs Armory across namespaces with pre-flight checks to prevent common misconfigurations. This mode requires a ClusterRole.

- Get the latest Armory Operator release.

  mkdir -p spinnaker-operator && cd spinnaker-operator
  bash -c 'curl -L https://github.com/armory-io/spinnaker-operator/releases/latest/download/manifests.tgz | tar -xz'

  Alternately, if you want to install open source Spinnaker, get the latest release of the open source Operator.

  mkdir -p spinnaker-operator && cd spinnaker-operator
  bash -c 'curl -L https://github.com/armory/spinnaker-operator/releases/latest/download/manifests.tgz | tar -xz'

- Install or update CRDs across the cluster.

  kubectl apply -f deploy/crds/

- Create the spinnaker-operator namespace.

  If you want to use a namespace other than spinnaker-operator in cluster mode, you also need to edit the namespace in deploy/operator/kustomize/role_binding.yaml.

  kubectl create ns spinnaker-operator

- Install the Operator in either cluster or basic mode:

  cluster mode:

  kubectl -n spinnaker-operator apply -f deploy/operator/kustomize

  basic mode:

  kubectl -n spinnaker-operator apply -f deploy/operator/basic

After installation, you can verify that the Operator is running with the following command:

kubectl -n spinnaker-operator get pods

The command returns output similar to the following if the pod for the Operator is running:

NAME                                  READY   STATUS    RESTARTS   AGE
spinnaker-operator-7cd659654b-4vktl   2/2     Running   0          6s
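The readiness check above can also be scripted. The following is a minimal sketch, not part of the Operator tooling: `pods_ready` is a hypothetical helper that reads `kubectl get pods --no-headers` output on standard input and assumes the default column order (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# pods_ready (hypothetical helper): succeed only if at least one pod is
# listed and every listed pod is Running with all containers ready.
# Assumes the default `kubectl get pods --no-headers` column layout.
pods_ready() {
    awk '
        NF == 0 { next }                   # skip blank lines
        {
            split($2, ready, "/")          # READY looks like "2/2"
            if (ready[1] != ready[2] || $3 != "Running") bad = 1
            seen = 1
        }
        END {
            if (seen && !bad) exit 0
            exit 1
        }
    '
}
```

A possible invocation is: kubectl -n spinnaker-operator get pods --no-headers | pods_ready && echo "Operator is running"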

Install Armory using Kustomize patches

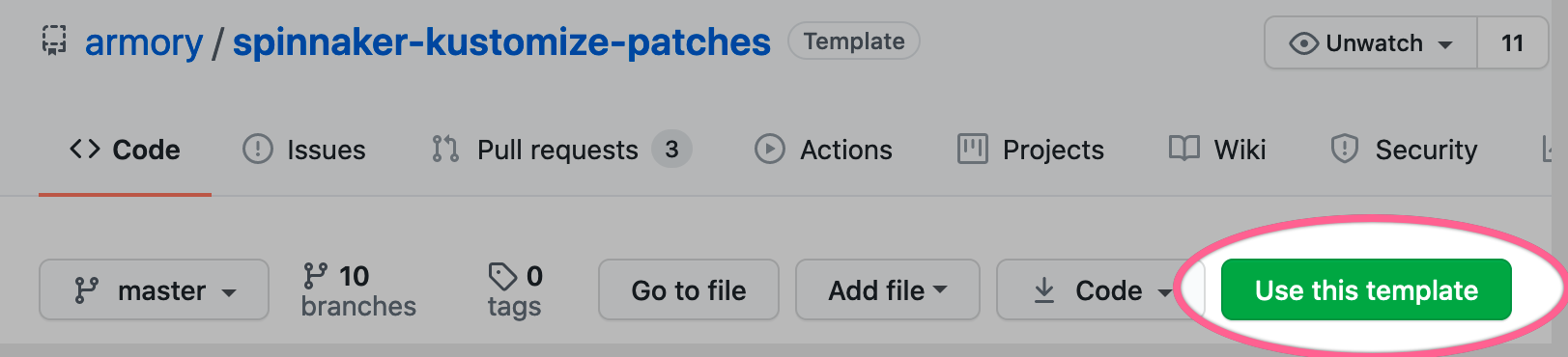

Many common configuration options for Armory and Spinnaker™ are available in the spinnaker-kustomize-patches repository. This gives you a reliable starting point when adding Armory or Spinnaker features to your cluster or removing them from it.

To start, create your own copy of the spinnaker-kustomize-patches repository by clicking the Use this template button. Once created, clone this repository to your local machine.

If you installed the Operator in basic mode, you must set the namespace field in your kustomization.yml file to the spinnaker-operator namespace. The permissions in basic mode are scoped to a single namespace, so the Operator cannot see anything in other namespaces.
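Before applying the patches in basic mode, it can help to confirm which namespace the kustomization targets. The sketch below is an illustration only: `kustomize_namespace` is a hypothetical helper, and it assumes the namespace field is an unquoted top-level key in the file.

```shell
# kustomize_namespace FILE (hypothetical helper): print the value of the
# top-level `namespace:` field, or nothing if the field is absent.
# Assumes the value is unquoted; a trailing comment is ignored.
kustomize_namespace() {
    sed -n 's/^namespace:[[:space:]]*\([^[:space:]#]*\).*$/\1/p' "$1"
}
```

For a basic mode install, the command kustomize_namespace kustomization.yml should print spinnaker-operator.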

Once configured, run the following command to install:

# If you have `kustomize` installed:

kustomize build | kubectl apply -f -

# If you only have kubectl installed:

kubectl apply -k .

Watch the install progress and check out the pods being created:

kubectl -n spinnaker get spinsvc spinnaker -w

How configuration works

Armory’s configuration is stored in a SpinnakerService custom resource, defined by the spinnakerservices.spinnaker.armory.io Custom Resource Definition (CRD), that you can keep in version control. After you install the Armory Operator, you can use kubectl to manage the lifecycle of your deployment.

apiVersion: spinnaker.armory.io/v1alpha2
kind: SpinnakerService
metadata:
  name: spinnaker
spec:
  spinnakerConfig:
    config:
      # Change the `.x` segment to the latest patch release found on our website:
      # https://docs.armory.io/docs/release-notes/rn-armory-spinnaker/
      version: 2.25.x

See the full format for more configuration options.
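Because the example manifest uses a placeholder patch segment, a small guard can catch a version that was never filled in. This is a sketch under one assumption: that release versions take the plain MAJOR.MINOR.PATCH form. `is_concrete_version` is a hypothetical name, not an Operator command.

```shell
# is_concrete_version VERSION (hypothetical helper): succeed for a fully
# specified version such as 2.25.0 and fail for a placeholder such as 2.25.x.
# Assumes versions are plain MAJOR.MINOR.PATCH with numeric segments.
is_concrete_version() {
    printf '%s\n' "$1" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'
}
```

For example: is_concrete_version 2.25.x || echo "replace the .x segment before applying"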

Upgrade Armory Enterprise

To upgrade an existing Armory Enterprise deployment, perform the following steps:

- Change the version field in the deploy/spinnaker/basic/SpinnakerService.yml file to the target version for the upgrade.

- Apply the updated manifest:

  kubectl -n spinnaker apply -f deploy/spinnaker/basic/SpinnakerService.yml

  You can view the upgraded services starting up with the following command:

  kubectl -n spinnaker describe spinsvc spinnaker

- Verify the upgraded version of Spinnaker:

  kubectl -n spinnaker get spinsvc

  The command returns information similar to the following:

  NAME        VERSION
  spinnaker   2.20.2

  VERSION should reflect the target version for your upgrade.
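The first upgrade step, editing the version field, can be scripted. The following sketch assumes the manifest contains a single key named exactly version; `set_spinnaker_version` is a hypothetical helper. It writes through a temporary file so that it does not depend on the in-place flag of any particular sed implementation.

```shell
# set_spinnaker_version FILE VERSION (hypothetical helper): rewrite every line
# whose key is exactly `version`, keeping its indentation. Keys such as
# `apiVersion` are left untouched because the match is anchored to the key.
set_spinnaker_version() {
    manifest=$1
    target=$2
    scratch=$(mktemp) || return 1
    sed "s/^\([[:space:]]*version:\)[[:space:]].*/\1 $target/" "$manifest" > "$scratch" &&
        cat "$scratch" > "$manifest"
    status=$?
    rm -f "$scratch"
    return $status
}
```

A possible use is set_spinnaker_version deploy/spinnaker/basic/SpinnakerService.yml followed by the target version, then the kubectl apply command from the steps above.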

Manage Armory Enterprise instances

The Armory Operator allows you to use kubectl to manage your Armory Enterprise deployment.

List Armory Enterprise instances

kubectl get spinnakerservice --all-namespaces

The short name spinsvc is also available.

Describe Armory Enterprise instances

kubectl -n <namespace> describe spinnakerservice spinnaker

Delete Armory Enterprise instances

kubectl -n <namespace> delete spinnakerservice spinnaker

Manage Armory Enterprise configuration in a Kubernetes manifest

Kustomize

Because Armory Enterprise’s configuration is now a Kubernetes manifest, you can manage SpinnakerService and related manifests in a consistent and repeatable way with Kustomize.

The spinnaker-kustomize-patches repo has many configuration examples.

kubectl create ns spinnaker

kustomize build deploy/spinnaker/kustomize | kubectl -n spinnaker apply -f -

There are many more possibilities:

- managing manifests of MySQL instances

- ensuring the same configuration is used between Staging and Production Spinnaker

- splitting accounts in their own kustomization for an easy to maintain configuration

See this repo for examples of common setups that you can adapt to your needs.

Secret management

You can store secrets in one of the supported secret engines.

Kubernetes Secret

With the Armory Operator, you can also reference secrets stored in existing Kubernetes secrets in the same namespace as Spinnaker.

The format is:

- encrypted:k8s!n:<secret name>!k:<secret key> for string values. These are added as environment variables to the Spinnaker deployment.

- encryptedFile:k8s!n:<secret name>!k:<secret key> for file references. Files come from a volume mount in the Spinnaker deployment.
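To avoid typos in the two reference formats, the string can be generated rather than typed. This is an illustrative sketch only: `k8s_secret_ref` is a hypothetical helper, and the secret name and key in the usage line are made up.

```shell
# k8s_secret_ref NAME KEY [file] (hypothetical helper): print a reference to a
# Kubernetes secret. Pass "file" as the third argument to get the
# encryptedFile form used for file references.
k8s_secret_ref() {
    prefix=encrypted
    if [ "${3:-}" = "file" ]; then
        prefix=encryptedFile
    fi
    printf '%s:k8s!n:%s!k:%s\n' "$prefix" "$1" "$2"
}
```

For example, k8s_secret_ref spin-secrets github-token prints encrypted:k8s!n:spin-secrets!k:github-token.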

Custom Halyard configuration

To override Halyard’s configuration, create a ConfigMap with the configuration changes you need. For example, if using secrets management with Vault, Halyard and Operator containers need your Vault configuration:

apiVersion: v1
kind: ConfigMap
metadata:
  name: halyard-custom-config
data:
  halyard-local.yml: |
    secrets:
      vault:
        enabled: true
        url: <URL of vault server>
        path: <cluster path>
        role: <k8s role>
        authMethod: KUBERNETES

Then, you can mount it in the Operator deployment and make it available to the Halyard and Operator containers:

# apps/v1 replaces extensions/v1beta1, which Kubernetes 1.16 removed for Deployments
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spinnaker-operator
  ...
spec:
  template:
    spec:
      containers:
      - name: spinnaker-operator
        ...
        volumeMounts:
        - mountPath: /opt/spinnaker/config/halyard.yml
          name: halconfig-volume
          subPath: halyard-local.yml
      - name: halyard
        ...
        volumeMounts:
        - mountPath: /opt/spinnaker/config/halyard-local.yml
          name: halconfig-volume
          subPath: halyard-local.yml
      volumes:
      - configMap:
          defaultMode: 420
          name: halyard-custom-config
        name: halconfig-volume

GitOps

Armory Enterprise can deploy manifests or Kustomize packages. You can configure Armory Enterprise to redeploy itself (or use a separate Armory Enterprise) with a trigger on the git repository containing its configuration.

A change to Armory Enterprise’s configuration follows this flow:

Pull Request -> Approval -> Configuration Merged -> Pipeline Trigger in Spinnaker -> Deploy Updated SpinnakerService

This process is auditable and reversible.

Accounts CRD (Experimental)

The Operator has a CRD for Armory Enterprise accounts. SpinnakerAccount is defined in an object - separate from the main Spinnaker config - so its creation and maintenance can be automated.

To read more about this CRD, see SpinnakerAccount.

Migrate from Halyard to the Armory Operator

If you have a current Armory instance installed with Halyard, use this guide to migrate existing configuration to Operator.

The migration process from Halyard to Operator consists of the following steps:

- Install the Armory Operator.

- Export Armory configuration.

  Copy the desired profile’s content from the config file, which is under ~/.hal. For example, to migrate the default hal profile, copy <CONTENT> from a config file with the following structure:

  currentDeployment: default
  deploymentConfigurations:
  - name: default
    <CONTENT>

  Add <CONTENT> in the spec.spinnakerConfig.config section of the SpinnakerService manifest as follows:

  spec:
    spinnakerConfig:
      config:
        <CONTENT>

  For more details, see the .spec.spinnakerConfig.config section of SpinnakerService Options.

- Export Armory profiles.

  If you have configured Armory profiles, you need to migrate these profiles to the SpinnakerService manifest.

  First, identify the current profiles under ~/.hal/default/profiles. For each file, create an entry under spec.spinnakerConfig.profiles.

  For example, you have the following profile:

  $ ls -a ~/.hal/default/profiles | sort
  echo-local.yml

  Create a new entry with the name of the file without -local.yml as follows:

  spec:
    spinnakerConfig:
      profiles:
        echo:
          <CONTENT>

  For more details, see the .spec.spinnakerConfig.profiles section of SpinnakerService Options.

- Export Armory settings.

  If you configured Armory settings, you also need to migrate these settings to the SpinnakerService manifest.

  First, identify the current settings under ~/.hal/default/service-settings. For each file, create an entry under spec.spinnakerConfig.service-settings.

  For example, you have the following settings:

  $ ls -a ~/.hal/default/service-settings | sort
  echo.yml

  Create a new entry with the name of the file without .yml as follows:

  spec:
    spinnakerConfig:
      service-settings:
        echo:
          <CONTENT>

  For more details, see the .spec.spinnakerConfig.service-settings section of SpinnakerService Options.

- Export local file references.

  If you have references to local files in any part of the config, like kubeconfigFile, service account json files or others, you need to migrate these files to the SpinnakerService manifest.

  For each file, create an entry under spec.spinnakerConfig.files.

  For example, you have a Kubernetes account configured like this:

  kubernetes:
    enabled: true
    accounts:
    - name: prod
      requiredGroupMembership: []
      providerVersion: V2
      permissions: {}
      dockerRegistries: []
      configureImagePullSecrets: true
      cacheThreads: 1
      namespaces: []
      omitNamespaces: []
      kinds: []
      omitKinds: []
      customResources: []
      cachingPolicies: []
      oAuthScopes: []
      onlySpinnakerManaged: false
      kubeconfigFile: /home/spinnaker/.hal/secrets/kubeconfig-prod
    primaryAccount: prod

  The kubeconfigFile field is a reference to a physical file on the machine running Halyard. You need to create a new entry in the files section like this:

  spec:
    spinnakerConfig:
      files:
        kubeconfig-prod: |
          <CONTENT>

  Then replace the file path in the config to match the key in the files section:

  kubernetes:
    enabled: true
    accounts:
    - name: prod
      requiredGroupMembership: []
      providerVersion: V2
      permissions: {}
      dockerRegistries: []
      configureImagePullSecrets: true
      cacheThreads: 1
      namespaces: []
      omitNamespaces: []
      kinds: []
      omitKinds: []
      customResources: []
      cachingPolicies: []
      oAuthScopes: []
      onlySpinnakerManaged: false
      kubeconfigFile: kubeconfig-prod  # File name must match "files" key
    primaryAccount: prod

  For more details, see the .spec.spinnakerConfig.files section of SpinnakerService Options.

- Export Packer template files (if used).

  If you are using custom Packer templates for baking images, you need to migrate these files to the SpinnakerService manifest.

  First, identify the current templates under ~/.hal/default/profiles/rosco/packer. For each file, create an entry under spec.spinnakerConfig.files.

  For example, you have the following example-packer-config file:

  $ tree -v ~/.hal/default/profiles
  ├── echo-local.yml
  └── rosco
      └── packer
          └── example-packer-config.json

  2 directories, 2 files

  Create a new entry for each file. Name the entry by listing each folder in the path, starting with profiles, joined by double underscores (__), and ending with the name of the file:

  spec:
    spinnakerConfig:
      files:
        profiles__rosco__packer__example-packer-config.json: |
          <CONTENT>

  For more details, see the .spec.spinnakerConfig.files section of SpinnakerService Options.

- Validate your Armory configuration if you plan to run the Operator in cluster mode.

  kubectl -n <namespace> apply -f <spinnaker service manifest> --dry-run=server

  The validation service throws an error when something is wrong with your manifest.

- Apply your SpinnakerService:

  kubectl -n <namespace> apply -f <spinnaker service>
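The naming rules in the export steps above (drop -local.yml for profiles, drop .yml for service settings, join path segments with double underscores for files under profiles) can be sketched as small shell functions. The function names are hypothetical and not part of Halyard or the Operator; each takes a path relative to ~/.hal/default.

```shell
# Hypothetical helpers that derive SpinnakerService entry names from
# Halyard file names, following the naming rules in the migration steps.

# profile_key FILE: profiles/echo-local.yml -> echo
profile_key() {
    basename "$1" | sed 's/-local\.yml$//'
}

# settings_key FILE: service-settings/echo.yml -> echo
settings_key() {
    basename "$1" .yml
}

# files_key PATH: profiles/rosco/packer/example-packer-config.json
#              -> profiles__rosco__packer__example-packer-config.json
files_key() {
    printf '%s\n' "$1" | sed 's|/|__|g'
}
```

Note that files referenced directly from the config, such as the kubeconfig in the example, use whatever key you choose in the files section, so files_key applies only to files under the profiles folder.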

Uninstall the Armory Operator

Uninstalling the Armory Operator involves deleting its deployment and SpinnakerService CRD. When you delete the CRD, any Armory installation created by Operator gets deleted. This occurs because the CRD is set as the owner of the Armory resources, so they get garbage collected.

There are two ways in which you can remove this ownership relationship so that Armory is not deleted when deleting the Operator: replacing Operator with Halyard or removing Operator ownership of Armory resources.

Replace Operator with Halyard

First, export Armory configuration settings to a format that Halyard understands:

- From the SpinnakerService manifest, copy the contents of spec.spinnakerConfig.config to its own file named config, and save it with the following structure:

  currentDeployment: default
  deploymentConfigurations:
  - name: default
    <<CONTENT HERE>>

- For each entry in spec.spinnakerConfig.profiles, copy it to its own file inside a profiles folder with a <entry-name>-local.yml name.

- For each entry in spec.spinnakerConfig.service-settings, copy it to its own file inside a service-settings folder with a <entry-name>.yml name.

- For each entry in spec.spinnakerConfig.files, copy it to its own file inside a directory structure following the name of the entry with double underscores (__) replaced by a path separator. For example, an entry named profiles__rosco__packer__example-packer-config.json results in the file profiles/rosco/packer/example-packer-config.json.
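The file names described in the steps above can be computed from the entry names. This is a minimal sketch with hypothetical function names; it only derives names and does not read the manifest or write any files.

```shell
# Hypothetical helpers that map SpinnakerService entry names back to the
# file names Halyard expects, following the rules in the steps above.

# profile_file ENTRY: echo -> profiles/echo-local.yml
profile_file() {
    printf 'profiles/%s-local.yml\n' "$1"
}

# settings_file ENTRY: echo -> service-settings/echo.yml
settings_file() {
    printf 'service-settings/%s.yml\n' "$1"
}

# files_entry_path ENTRY: replace each double underscore with a path separator.
files_entry_path() {
    printf '%s\n' "$1" | sed 's|__|/|g'
}
```

For example, files_entry_path profiles__rosco__packer__example-packer-config.json prints profiles/rosco/packer/example-packer-config.json.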

When finished, you have the following directory tree:

config
default/
  profiles/
  service-settings/

After that, move these files to your Halyard home directory and deploy Armory with the hal deploy apply command.

Finally, delete the Operator and its CRDs from the Kubernetes cluster.

kubectl delete -n <namespace> -f deploy/operator/<installation type>

kubectl delete -f deploy/crds/

Remove Operator ownership from Armory resources

Run the following script to remove ownership of Armory resources, where NAMESPACE is the namespace where Armory is installed:

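# Set NAMESPACE to the namespace where Armory is installed.
NAMESPACE=
for rtype in deployment service
do
    # List every resource of this type labeled app=spin, then drop its owner references.
    for r in $(kubectl -n "$NAMESPACE" get "$rtype" --selector=app=spin -o jsonpath='{.items[*].metadata.name}')
    do
        kubectl -n "$NAMESPACE" patch "$rtype" "$r" --type json -p='[{"op": "remove", "path": "/metadata/ownerReferences"}]'
    done
done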

After the script completes, delete the Armory Operator and its CRDs from the Kubernetes cluster:

kubectl delete -n <namespace> -f deploy/operator/<installation type>

kubectl delete -f deploy/crds/
